Hi all,

An update from my side: my custom RGB light (above), which was under development, is up and running, and I have overhauled my rig along with it:

I purchased a simple metal box with a 55 x 55 mm opening in its lid to mount the light:

The opening allows for both 35mm and MF scanning. I chose a metal box because the RGB matrix gets hot in use, and because internal reflections help raise the output and make it more homogeneous.

Mounting process and tests, plus the addition of an opal glass diffuser (the pics speak for themselves):

Ready and final test:

Mounted in upgraded rig:

The rig can go on a tripod, but simply placing it on my desk is more compact and convenient.

The rig uses a Nikon Df with a 105/2.8 macro lens, with AF calibrated at 1 to 1.1x magnification, which enables AF on each shot. The 105/2.8 lens has two threads: an aluminum hood mounts on the 62 mm outer thread, which is static, while the inner tube of the lens, with its 52 mm thread, can move in and out freely to focus. The hood has a 67 mm front thread, on which a 67-52 mm reducer is mounted, followed by a stack of lightweight 52 mm rings (from Nikon macro extension tubes of the 1960-1970 era). The stack ends with an old 52 mm filter from which the glass was removed, which then adapts to the bellows of a Nikon PS6 slide copy adapter. The camera can be moved up/down and sideways to align it. The custom RGB light is mounted at the end and can slide back and forth to adjust the distance. I made a custom “duster” from two record player antistatic carbon fiber brushes.

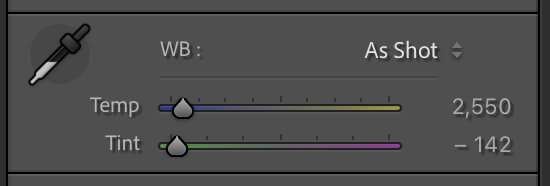

Tests showed that the light does indeed provide a homogeneous intensity across the 35mm frame. The R, G, and B levels and the overall intensity of the light are individually adjustable. After the light has warmed up and is stable, I adjust them so that the R, G, and B histogram peaks have identical positions and amplitudes in LRC when the light shines through a blank part of a color negative and the shot is imported into LRC with a custom linear profile for the camera, which I created using Adobe’s DNG Profile Editor. I also looked at the RAW data with RawDigger, and there too the peaks are at the same position and intensity. The bandwidth of the R, G, and B LEDs is “quite narrow”. Camera white balance is set either to 5560K (daylight) or to a custom WB measured on the light shining through a blank part of the color negative (this doesn’t seem to matter much).

The imported scans are then cropped to remove non-picture areas. I hit “auto” tone in LRC while the picture is still not inverted, to balance the exposure levels, but I null the “vibrance” and “saturation” adjustments that “auto” makes in order to keep everything linear (i.e., only exposure is adjusted, while color balance is retained). The picture is then inverted manually by inverting the tone curves for the R, G, and B channels, while discarding the regions that contain no pixels. This way neither a white balance nor NLP (sorry) is required.

This is fast, and no further color interpretation is made, since everything is kept linear. (NLP wants us to set a white balance on the film border and makes non-linear inversions in the R, G, and B tone curves, which shifts the color balance non-linearly as far as I can see. @Nate: please correct me if my impression is wrong here.)
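For anyone who wants to check the peak matching on a blank-film frame outside of LRC or RawDigger, here is a minimal sketch in Python/NumPy. It assumes the frame has already been decoded to a linear float array with values in [0, 1]. The function names `channel_peaks` and `is_balanced`, the bin count, and the tolerance are hypothetical choices for illustration and are not part of my actual workflow.

```python
import numpy as np

def channel_peaks(rgb, bins=256):
    """Return [(peak_position, peak_height), ...] for the R, G, B histograms.

    rgb: float array of shape (H, W, 3) with linear values in [0, 1]
         (assumed to be a crop of blank, unexposed film base).
    """
    peaks = []
    for c in range(3):
        counts, edges = np.histogram(rgb[..., c], bins=bins, range=(0.0, 1.0))
        i = int(np.argmax(counts))                  # most populated bin
        centre = 0.5 * (edges[i] + edges[i + 1])    # bin centre as peak position
        peaks.append((centre, int(counts[i])))
    return peaks

def is_balanced(rgb, tol=0.02):
    """True if the three histogram peaks sit within `tol` of each other.

    `tol` is an arbitrary illustrative threshold, not a measured value.
    """
    positions = [pos for pos, _ in channel_peaks(rgb)]
    return max(positions) - min(positions) <= tol
```

If `is_balanced` returns `False` for the blank frame, the R, G, or B level of the light would be adjusted and the frame reshot until the three peaks line up.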

My intent was to do everything as objectively as possible (i.e., without making colors “look better” by changing the balance in a non-linear fashion), and therefore to keep everything linear after I negate the orange mask in hardware with my custom RGB light, so that the specific tones of a given film stock come through. So far I have tested Portra 160, Portra 400, and Ektar 100, and indeed, the specific tones that Portra is known for seem to come out, as do the bold colors that Ektar is known for. I was struggling with NLP and alternative inversion software because of the many different color interpretations of the same picture that were possible; they all might look okay or good, but I had a hard time deciding which looked best. I am well aware of statements that there is no “correct” way with color negative film, and that the color balance is a personal interpretation. But by keeping everything linear in the inversion process, and using the custom light to balance out the orange mask, I now see brilliant colors that seem to represent what a specific film stock is known for. Whether this method is entirely correct or still an “interpretation”, I don’t know.

To my surprise, I no longer need or want any inversion software. Manual inversion is (for me) faster, since I do not have to decide between the many possible options for interpretation, and I believe it is more accurate at extracting the specific tones that a given film stock is known for (but I might be wrong). I still use NLP for B&W, since it creates beautiful tones there, but for color film I now stay away from inversion software because of color interpretations that are outside of my control, and possibly more subjective than what I can do manually while keeping everything linear. The only subjective part of my current approach is the exact position of the ends of the curves in the tone curve editor, which I move to remove a slight color cast in the shadows or highlights if I see one, but the curves remain linear.
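To make the "linear curve with adjustable ends" idea concrete, here is a rough Python/NumPy sketch of a per-channel straight-line inversion. It is only an analogy in array form and not what LRC does internally. The names `invert_linear` and `endpoints_from_data` are hypothetical, and picking the end points by percentile is an assumption standing in for what I do by eye in the tone curve editor.

```python
import numpy as np

def invert_linear(neg, lo, hi):
    """Invert a negative with one straight line per channel.

    neg: float array (H, W, 3), linear values in [0, 1].
    lo, hi: per-channel end points (length-3), with hi > lo.
            neg == hi (thin film, bright in the scan) maps to 0.0 (black),
            neg == lo (dense film, dark in the scan) maps to 1.0 (white).
    """
    neg = np.asarray(neg, dtype=np.float64)
    lo = np.asarray(lo, dtype=np.float64)
    hi = np.asarray(hi, dtype=np.float64)
    pos = (hi - neg) / (hi - lo)      # straight line with negative slope
    return np.clip(pos, 0.0, 1.0)     # values outside the end points are clipped

def endpoints_from_data(neg, clip_percent=0.1):
    """Pick end points per channel so that empty histogram regions are dropped.

    clip_percent is an illustrative assumption; 0.0 uses the exact min and max.
    """
    flat = np.asarray(neg, dtype=np.float64).reshape(-1, 3)
    lo = np.percentile(flat, clip_percent, axis=0)
    hi = np.percentile(flat, 100.0 - clip_percent, axis=0)
    return lo, hi
```

Nudging a single entry of `lo` or `hi` shifts one channel's shadows or highlights, which corresponds to the small cast correction described above, while the mapping itself stays a straight line.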

Thoughts and comments welcomed…