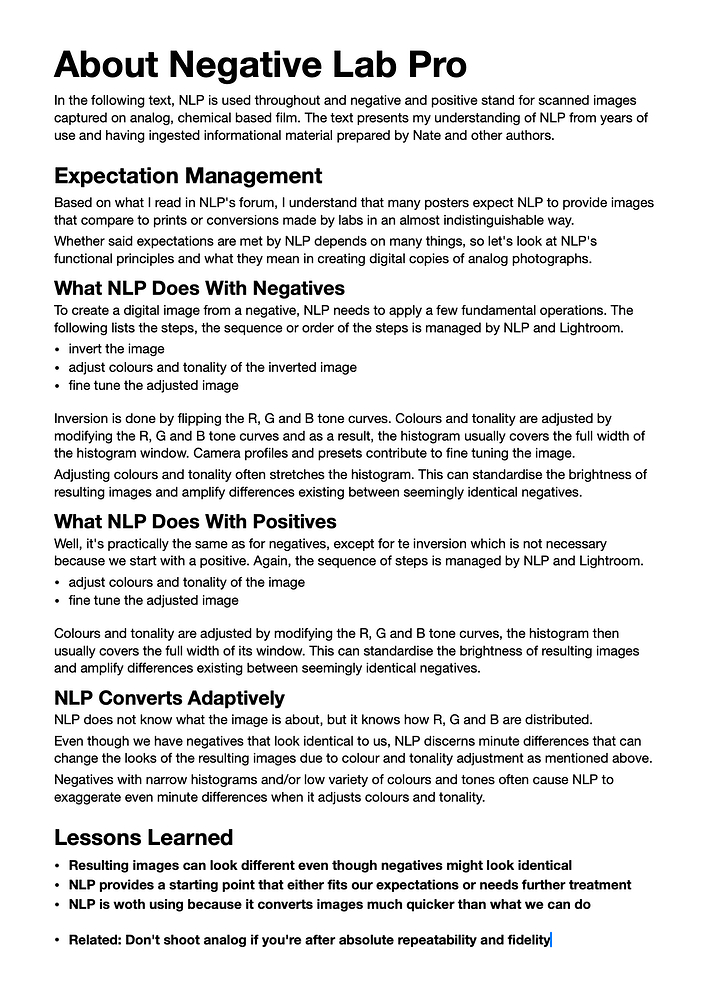

I’d like to share a few thoughts… Some have been sprinkled across a few posts that mostly deal with expectations. Here’s the condensed text. It’s an image of a text document, since I can’t attach the document itself.

Well, it’s hard to define ‘fidelity’ with respect to colour negative, but I would expect repeatability from a software program like this. If it’s true that “images can look different though negatives might look identical”, then that’s pretty disappointing as well. Of course, this is an NLP forum, so we can’t even begin to discuss other options and whether this is true of them as well.

It’s really about expectations vs. reality - like in real life.

And even though mathematical operations are fairly deterministic, slight changes in the input can lead to big changes in the output. NLP is simply far more perceptive than we are: humans can distinguish differences of around 1% (about 7 bits), while NLP works with input of 12 or more bits, i.e. far finer nuances that can tip the scale.
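The 7-bit vs. 12-bit comparison above is simple arithmetic and can be checked in a few lines. This is a minimal Python sketch that assumes linear code values spread evenly across the full range; real raw data and human vision are not linear, so treat it as an order-of-magnitude illustration only.

```python
# Resolution of a single channel at different bit depths (linear code values assumed).


def levels(bits: int) -> int:
    """Number of distinct code values a channel can hold at `bits` bits."""
    return 2 ** bits


def step_percent(bits: int) -> float:
    """Size of one code-value step as a percentage of full scale."""
    return 100.0 / levels(bits)


for bits in (7, 8, 12, 14, 16):
    print(f"{bits:>2} bits: {levels(bits):>6} levels, one step = {step_percent(bits):.4f} % of full scale")

# How many 12-bit steps fit into a ~1 % difference that a viewer might just notice?
print(f"12-bit steps per 1 %: {1.0 / step_percent(12):.1f}")
```

A 7-bit step is about 0.78 % of full scale, close to the ~1 % figure above, while a 12-bit step is about 0.024 %, so roughly 41 twelve-bit steps fit inside one just-noticeable difference.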

Thanks @Digitizer, but I think this is an overly simplistic explanation and isn’t really true as of the latest versions of Negative Lab Pro (v3+).

Resulting images can look different even though negatives might look identical

Using “Match” or “Roll Analysis”, you are literally one click away from making the image processing identical across the negatives (assuming they were digitized consistently).

Yes, out of the box, NLP defaults to analyzing each image independently and creating the optimal settings for that specific image. It is true that this can create nuances between similar images (especially thin, under-exposed negatives), but again, it is very easy to change this to analyze groups of images together, and even to adjust the Roll Process to more closely imitate a “Pro Lab” output, a “Basic Lab” output, or a “Darkroom Paper” output.

Of course, these need to be digitized consistently for the results to be consistent. The advantage of developing software for a discrete, closed system (like a Frontier scanner) is that you know exactly what to expect and the input will be perfectly consistent. NLP needs to work with all kinds of setups, which is why I have it default to individual image analysis (as this is a more tolerant strategy). But with a perfectly consistent setup and using Roll Analysis, NLP should produce results that are at least as consistent and as accurate as a calibrated lab scanner.
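To illustrate why per-image analysis can shift similar frames while group analysis keeps them consistent, here is a toy Python model. It is an assumption for illustration only, not how NLP actually analyzes negatives: black and white points are taken as simple per-channel minimum and maximum values, either per frame or across the whole roll. All function names and pixel values are hypothetical; the scene echoes the building-with-a-red-truck example from the other thread.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]
Frame = List[RGB]


def channel_bounds(pixels: List[RGB]) -> Tuple[RGB, RGB]:
    """Per-channel black point (minimum) and white point (maximum)."""
    lo = tuple(min(p[c] for p in pixels) for c in range(3))
    hi = tuple(max(p[c] for p in pixels) for c in range(3))
    return lo, hi


def apply_levels(px: RGB, lo: RGB, hi: RGB) -> RGB:
    """Stretch each channel so its black point maps to 0.0 and its white point to 1.0."""
    return tuple((px[c] - lo[c]) / (hi[c] - lo[c]) for c in range(3))


def convert_individually(frames: List[Frame]) -> List[Frame]:
    """Each frame gets its own black/white points (per-frame analysis)."""
    out = []
    for frame in frames:
        lo, hi = channel_bounds(frame)
        out.append([apply_levels(p, lo, hi) for p in frame])
    return out


def convert_as_roll(frames: List[Frame]) -> List[Frame]:
    """One set of black/white points computed from all frames, applied to every frame."""
    lo, hi = channel_bounds([p for frame in frames for p in frame])
    return [[apply_levels(p, lo, hi) for p in frame] for frame in frames]


# Hypothetical inverted-scan values: shadow, building, sky, and (in frame B) a red truck.
shadow = (0.1, 0.1, 0.1)
building = (0.5, 0.5, 0.5)
sky = (0.8, 0.8, 0.8)
red_truck = (0.95, 0.2, 0.2)
frames = [
    [shadow, building, sky],             # frame A: no truck
    [shadow, building, sky, red_truck],  # frame B: red truck in front
]

for name, result in (("individual", convert_individually(frames)), ("roll", convert_as_roll(frames))):
    a, b = result[0][1], result[1][1]  # the building pixel in each frame
    print(name, [round(v, 3) for v in a], [round(v, 3) for v in b])
```

Under per-frame analysis the building's red channel drops from about 0.571 to 0.471 when the truck appears, giving it a cyan shift; under roll-wide analysis the building gets identical values in both frames. The shared values are consistent, not necessarily "correct": the truck still influences the one white point used for the whole roll.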

NLP provides a starting point that either fits our expectations or needs further treatment

100%. The initial conversion is just a starting point, and further editing is often helpful. (By the way, this is true of any method, including lab scans.)

**The difference is that** NLP’s RAW workflow makes it easy to make even major changes after conversion to match your vision for the final film shot, including:

- The Underlying Film Analysis (via Roll Analysis and Match)

- Tonality (Gamma adjustments via “Brightness”, exposure, highlights, shadows, etc.)

- Dynamic Range (via direct adjustments to the white clipping and black clipping point)

- Color Balance (including precise controls over shadow and highlight color balance)

- Fine Tuned LUTs
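The tonality and dynamic-range controls listed above boil down to moving clipping points and bending the curve between them. The following is a generic levels-plus-gamma sketch in Python, assuming normalized values from 0.0 to 1.0; it is not NLP's actual implementation of “Brightness” or the clipping controls, and the function name and parameters are hypothetical.

```python
def tone_map(value: float, black: float = 0.0, white: float = 1.0, gamma: float = 1.0) -> float:
    """Map a normalised value through black/white clipping points, then a gamma curve.

    Values at or below `black` clip to 0.0, values at or above `white` clip to 1.0,
    and gamma > 1.0 lifts the midtones (brighter) while gamma < 1.0 darkens them.
    """
    if white <= black:
        raise ValueError("white point must be above black point")
    t = (value - black) / (white - black)
    t = min(max(t, 0.0), 1.0)  # clipping: anything outside the new range is pinned
    return t ** (1.0 / gamma)


print(tone_map(0.25))                         # no change with default settings
print(tone_map(0.25, gamma=2.0))              # midtone lifted by the gamma curve
print(tone_map(0.60, black=0.05, white=0.85)) # stretched by the moved clip points
print(tone_map(0.90, white=0.85))             # beyond the white point, so pinned to 1.0
```

Moving the black and white points changes which tones are discarded and how much contrast remains, while gamma redistributes the midtones without touching the endpoints, which is why these controls can be adjusted independently after conversion.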

Don’t shoot analog if you’re after absolute repeatability

It’s true that there are a lot more opportunities with analog to “mess something up” somewhere along the way (from shooting film, to chemical processing, to digitization mistakes or inconsistencies).

I would just say this: The more you are willing to invest into the process of film, the more you will get out of it!

Yes, it sucks when something goes wrong with the chemical processing, or when you underexpose a negative, or when you are getting uneven light during digitization. But the reward when you get everything just right is magic!

Thank you very much for that explanation. I’m not in any way doubting the sophistication of the colour processing within NLP, and yes, of course the results that you get from an individual frame, particularly one that might not have very much colour information to work with, will need experience and possibly time to get right. However, I’m relieved by your explanation in this and the other thread that, if the original film exposures are correct, you should get exactly the same colours across a group of images of the same subject under the same light, just as you can in the darkroom when you keep the same filtration when printing each of the images.

Due to my inexperience I’m not entirely sure whether ‘Batch’ or ‘Roll analysis’ should be used in my hypothetical example from the other thread of the building with a bright red or bright yellow truck in front, or no truck at all, so that the building stays the same in each conversion.

I mostly concur with your more detailed description of things I left out in order to condense my thoughts to a one-pager.

There is one thing though that I’d like to point out:

If we understand “process of film” to include the capture too, we can get into the situation seen in the thread “Inconsistent with Negative Conversion and Roll Analysis On”.

Looking at the histograms and metadata, I see that all images were well exposed. Nevertheless, the conversions show differences that, imo, can only result from different lighting or exposures when the photos were taken. Chemical film’s light vs. density characteristics aren’t as linear as a digital sensor’s. And while scanning effectively hid the differences that might be present in the original negatives, NLP accentuated them, which in the end led to the thread mentioned above.
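The point about film not being as linear as a sensor can be sketched numerically. This Python example assumes a generic S-shaped (logistic) characteristic curve with made-up constants; it is not data for any real stock, and real colour negative films have a much longer straight-line section than this exaggerated curve. It compares how much density one extra stop adds in the toe, middle, and shoulder against an idealised linear sensor.

```python
import math

# Hypothetical S-shaped characteristic curve: density vs. log10(exposure).
# All four constants are illustrative, not measured from any real film stock.
D_MIN, D_MAX = 0.2, 2.2      # base + fog, and maximum density
SLOPE, LOG_E_MID = 2.5, 0.0  # steepness and midpoint of the curve

STOP = math.log10(2.0)       # one stop = doubling the exposure


def density(log_e: float) -> float:
    """Film: density follows a logistic (S-shaped) curve of log exposure."""
    return D_MIN + (D_MAX - D_MIN) / (1.0 + math.exp(-SLOPE * (log_e - LOG_E_MID)))


def sensor_value(log_e: float) -> float:
    """Idealised digital sensor: output is directly proportional to exposure."""
    return 10.0 ** log_e


for label, log_e in (("toe", -1.2), ("middle", 0.0), ("shoulder", 1.2)):
    d_step = density(log_e + STOP) - density(log_e)
    s_ratio = sensor_value(log_e + STOP) / sensor_value(log_e)
    print(f"{label:>8}: +1 stop -> density +{d_step:.3f}, sensor output x{s_ratio:.2f}")
```

On this curve one extra stop adds roughly 0.36 density in the middle but only about 0.1 in the toe and 0.05 in the shoulder, while the idealised sensor doubles its output every time. Small exposure or lighting differences therefore land differently on the negative depending on where they fall on the curve.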

Maybe my conclusions are wrong here, but from converting my old 645 negatives, I’ve learnt to accept results that aren’t bull’s-eye every time, also because I mostly took each subject only once, and the takes vary in time of day and location as well as climate…

Anyways, NLP is a great help for bulk conversions (my use case), and in most cases further tweaks are made for art/craft reasons. The fun is in the making, and NLP manages the (boring) first steps with flying colours. Thanks again for a great solution @nate

I’ll jump in here for a sec and add my several centimes’ worth:

(1) The very first step when working with negatives, before we even open up NLP, is to neutralize the orange mask using the White Balance tool in Lightroom, then crop out everything but the image itself. This is crucial to the results of using NLP thereafter; otherwise the Black Point of the conversion will be wrong, and ipso facto everything else up the line.

(2) Save for laboratory conditions of image capture, there is no such thing as accuracy in this business. The colours are more or less credible and satisfying, depending on the case, but that has nothing to do with accuracy. No application will deliver accurate results from captures that were not meticulously controlled for accuracy.

(3) Unless one has peeked under the hood and played around a bit with the individual channel curves in Lightroom resulting from an NLP conversion, it’s hard to appreciate just how sensitive the results are to any repositioning of these curves; minor tweaks have lots of leverage on results. Nate must have put scads of time into refining each of the presets built into NLP for giving different appearances to a converted negative. Perhaps this has to do with the fact that each film was designed with its own characteristic curve, which is non-linear, and perhaps exponential functions influence the conversion from negative to positive with each remapping. That last bit I don’t know, it just seems so; Nate can advise.

(4) I am led to believe there are limitations in the Lightroom SDK which affect what third-party developers can do to manage how Lightroom treats the results of their own applications. In this specific case, they would appear to affect how well luminance and chroma adjustments in Lightroom can be kept separate when applied to NLP conversions from negative to positive.
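Step (1) above, neutralizing the orange mask, can be thought of as choosing per-channel gains that make the unexposed film base neutral. Here is a simplified Python sketch of that idea, assuming linear RGB values and plain per-channel multipliers; Lightroom's White Balance tool actually works through temperature/tint in its own colour pipeline, so this is only an analogue. The sample values and function names are hypothetical, and the sketch covers only the white-balance half of step (1), not the cropping.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def base_gains(film_base: RGB) -> RGB:
    """Per-channel gains that make the sampled film base neutral,
    normalised so the green channel is left unchanged."""
    r, g, b = film_base
    return (g / r, 1.0, g / b)


def apply_gains(px: RGB, gains: RGB) -> RGB:
    """Multiply each channel by its gain (a simple linear white balance)."""
    return tuple(v * k for v, k in zip(px, gains))


# Hypothetical linear RGB sampled from the unexposed rebate (film base) of a scan:
# the orange mask passes a lot of red, less green, and little blue.
film_base = (0.80, 0.50, 0.25)
gains = base_gains(film_base)

print("gains:", tuple(round(k, 3) for k in gains))
print("neutralised base:", tuple(round(v, 3) for v in apply_gains(film_base, gains)))

# Every image pixel gets the same gains, so the mask's cast is removed before inversion.
print("image pixel:", tuple(round(v, 3) for v in apply_gains((0.60, 0.30, 0.12), gains)))
```

After the gains are applied the film base reads as equal values in all three channels, so the remaining colour differences in the frame come from the exposure rather than from the mask.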

As a guy who ran the color correction, scanning, printing, and other digital departments of a huge portrait lab, I know a thing or two about converting color negatives to positives. I’ve also been using NLP for several years now. I think it is fantastic!

As far as expectations, a lot of folks think it should do all the work for them, perfectly, every time, just like they do when they set their digital camera on A or P and spray away. (That, too, is a poor expectation.)

All automatic systems in photography are based on some sort of averages. Meters want to see neutral gray to get exposure right. White balance systems are based on seeing neutral gray, too. NLP works similarly, in that it tries to evaluate the density and color balance of a negative, and its evaluation is affected by the tonal range and color content of scenes.
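The "based on averages" point can be made concrete with the classic gray-world heuristic, a textbook auto white balance method that assumes the whole scene averages to neutral gray. This Python sketch is that generic heuristic, not NLP's actual evaluation; the scene values are hypothetical. It shows how a large red subject under unchanged light pulls the correction and gives a gray card a cyan cast.

```python
from statistics import mean


def gray_world_gains(pixels):
    """Per-channel gains that pull each channel's average to the overall average
    (the classic 'gray world' assumption: the scene should average out to neutral)."""
    means = [mean(p[c] for p in pixels) for c in range(3)]
    target = mean(means)
    return tuple(target / m for m in means)


def apply_gains(px, gains):
    """Apply per-channel gains and round for display."""
    return tuple(round(v * k, 3) for v, k in zip(px, gains))


grey_card = (0.5, 0.5, 0.5)
neutral_scene = [grey_card, (0.2, 0.2, 0.2), (0.8, 0.8, 0.8)]
red_shirt_scene = neutral_scene + [(0.9, 0.1, 0.1), (0.9, 0.1, 0.1)]  # same light, red shirt added

for name, scene in (("neutral scene", neutral_scene), ("red shirt", red_shirt_scene)):
    print(name, "-> grey card rendered as", apply_gains(grey_card, gray_world_gains(scene)))
```

In the neutral scene the gray card stays neutral, but once the red shirt dominates, the card comes out at roughly (0.34, 0.66, 0.66): the system "corrects" the subject's colour rather than the light, which is exactly the kind of result that needs manual intervention.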

All this is to remind people that no automatic system is perfect. It will get you in the ballpark. But it is up to you to use it intelligently, to get an image the rest of the way to what you want to see.

Nate has done an outstanding job of constantly refining the tools to make them easier and quicker to use. Still, we can come up with plenty of workflow strategies to make things easier.

One of them is pretty straightforward, and I used it for years as an AV producer working with slide films: base exposures on an incident meter reading of a scene, or on a reflected light meter reading of a gray card or other exposure target. I would set a manual ISO, shutter speed, and aperture suited to the subject matter, then expose all frames in the same lighting at those settings. When the light changed, I would make another set of readings. I added filters to balance the light source, which is the rough equivalent of using manual white balance in digital capture.

That procedure gave me consistency. A similar approach can be used when converting negatives in NLP. The idea is to tweak a single frame, and apply those same settings to all the other frames exposed in the same lighting. This saves a lot of time! It is not going to help when you have 36 different subjects, each photographed under different lighting in different locations, but most of us don’t do that all the time.

In the end, NLP will nearly always get me in the ballpark of where I want to be. I’m responsible for knowing what I photographed and what it should look like, whether realistic, or as a mood image.

Indeed, we are thinking of two kinds of users…

- …converting images captured recently using current film stock.

  Using sound shooting and scanning practices as mentioned above will help NLP to produce fairly consistent conversions, be it with Roll Analysis or not. Further tweaking is then for taste, style, etc. rather than necessity.

- …converting images captured in the past and on film stock available there and then.

  Using sound scanning practices (also as outlined in the GUIDE) will help NLP to produce results with less variation, depending on the images’ content. Further tweaking is often necessary, though tweaking for taste, style, etc. is still possible.

While converting manually is possible, it takes a lot of time, less with B&W. Migrating an archive of images captured on film into the digital age takes a lot of time and effort. Using NLP saves a lot of time and effort and tweaking will be mostly for taste - which is the interesting part of the work.

Most of my film (90% of it 120) was exposed and processed 40 or more years ago. I have only rarely taken more than one shot of a subject. Therefore, I find varying results, but most of them don’t need re-converting.

Conclusion: Both kinds of users can profit from NLP and are advised to learn how to handle the workflow, its source material and its finishing steps.

This is essentially what you might expect to do in the darkroom with the same filtration. There is another thread, already mentioned, where the OP felt that they weren’t getting the expected consistency using Roll Analysis on a group of images of this kind, and, as I understand it, this is because Roll Analysis still processes each frame individually to a certain extent.

It seems that if you want consistency across such images, the alternatives to Roll Analysis are to create a setup and then use it across a group of images, or to use the new ‘Match’ option. With your invaluable experience of using NLP over the years, do you have any guidance on the best way to use NLP in these circumstances?

As an example, suppose I’m doing a series of product shots, or indeed portraits, in a studio with consistent flash lighting. I wouldn’t want the colour of the resulting pictures to vary according to the colour of the product, or the colour of the model’s clothing.

Let’s be blunt: two things can be “wrong”: NLP or the expectations.

Life (technical and otherwise) is about learning how to deal with what we get and/or cause.

The easy way is to photograph a ColorChecker Chart on the first frame. If your flash to subject distance and therefore exposure remains constant, all frames exposed like the chart was exposed will have the same color balance. Get the ColorChecker Chart looking right (I’m assuming you have a custom ICC monitor profile from a recent calibration), then copy those settings to all other frames exposed the same way. You may still have minor corrections to make, but overall, things will match better than with any form of automation.
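The chart-based workflow above amounts to deriving one set of corrections from a reference frame and applying it unchanged to every frame exposed the same way. Here is a Python sketch of that idea, assuming the correction can be modelled as simple per-channel gains that bring the chart's neutral patch to a chosen gray level; in practice this is done by copying or syncing settings in Lightroom/NLP, and the `Settings` class, helper names, target value, and pixel values are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

RGB = Tuple[float, float, float]


@dataclass(frozen=True)
class Settings:
    """Hypothetical correction derived once from the reference (chart) frame."""
    gains: RGB


def settings_from_grey_patch(patch: RGB, target: float = 0.5) -> Settings:
    """Choose gains so the chart's neutral patch lands on `target` in every channel."""
    return Settings(tuple(target / v for v in patch))


def render(frame: List[RGB], s: Settings) -> List[RGB]:
    """Apply the same fixed gains to every pixel; nothing is re-analysed per frame."""
    return [tuple(v * k for v, k in zip(px, s.gains)) for px in frame]


# Frame 1 holds the chart; its neutral patch came out warm in the scan (hypothetical values).
chart_patch = (0.60, 0.50, 0.40)
settings = settings_from_grey_patch(chart_patch)

# The same settings are copied to every frame shot under the same light and exposure,
# so the colour of the subject cannot change the correction.
roll = [
    [chart_patch, (0.30, 0.25, 0.20)],
    [(0.90, 0.20, 0.15), chart_patch],  # red shirt in frame
    [(0.15, 0.25, 0.60), chart_patch],  # blue shirt in frame
]
rendered = [render(frame, settings) for frame in roll]

for i, frame in enumerate(rendered, start=1):
    print(f"frame {i}:", [tuple(round(v, 3) for v in px) for px in frame])
```

Because the gains are fixed after the chart frame, the neutral patch renders identically whether the subject wears red or blue, which is the darkroom-style "same filtration for every print" behaviour discussed in this thread.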

There are variations on this approach, of course. If you just get an image looking right, and know that the following five images should look like it, too, then copy those settings from the “correct” image to all the other similarly exposed images.

In the school portrait industry, we used set lighting, manually set exposures, and exposure targets. Our color correctors used the target frame to adjust the initial color, then applied the same exact settings for the entire roll. This translated well to digital, too.

When it came to event work, however, most of that was done using Kodak’s DP2 Scene Balance Algorithm, which performed similarly to the automation tools in NLP. There was a LOT more tweaking of individual frames on those jobs (and they were priced accordingly).

I am both kinds of user, alternately. I have a large collection of negatives from before 2005. I occasionally convert rolls for other people.

You’re correct on both counts.

A lot of users just want a Magic Button they can push and have everything look perfect. That’s never going to exist! So long as there is some sort of auto evaluation and auto conversion going on, there will be variations that go out of bounds and require intervention. NLP and other systems are designed for the 80% of situations where automation works quite well. Tweaking is required for the other 20%.

I know that’s your angle, but it should be perfectly possible to design software that switches off the automation for a batch of images. So I think your expectations are lower than they need to be; I got the impression that Nate thought that as well.

Thanks, but with respect, I wasn’t asking about a Magic Bullet, nor how to arrive at an optimum result for any given studio situation. I was simply looking for a means to switch off the ‘auto evaluation’ across a batch of images once you had successfully found the correct settings for one of them. This ought to be simple. I had thought from your post that you had been using NLP on batches of this type of image, so you might have a recommendation as to the best approach.

Oxymoron? NLP is about automating the conversion. Automation needs some guidance; it cannot (yet) read your mind for what the results should be, and this guidance is something like “brightest light = 255, 255, 255” and “darkest shadows = 0, 0, 0”…

Deviations from these targets can be set via the clipping parameters, at least to a certain degree.

Leaving the brightest and darkest parts where they are (in the histogram) should be possible in general, whether the tools @nate uses in NLP can actually do it remains to be seen. One difficulty could be the non-linearity of the film’s colour characteristics that also vary from colour to colour.

We could also get a database filled with characteristics by stock, developed as prescribed. If development only varied a little, the values would be debatable, but still remain ballpark-ish. Lots of ifs to handle in software! And maybe more people could get happier with such a feature.

No, not an Oxymoron, and judging from other posts on here and on the NLP Facebook forum, it is a ‘feature’ that people would like to have. With my ‘studio’ example, the lighting and film camera exposure do not change, and yet, from what you say, NLP will be thrown by the colour of the subject being photographed, whether one uses Roll Analysis, Match, Sync or maybe copying saved settings, simply because it can’t stop itself from analysing every single image. I don’t want to keep harping on about the darkroom, but that is after all what colour negative was designed for. Colour analysers are not new; they can be used in the darkroom as well and are a great aid in arriving at an excellent print from a particular negative, though I never used one. However, it would be madness to use a colour analyser to ‘correct’ a portrait of a person wearing a red shirt when they change to a blue one. That is what you would expect from a basic minilab output.

Still it seems that you must be right and this is just something that NLP can’t handle, so I thank you for your time in trying to explain this to me and I apologise if I’ve been reluctant to accept it.

No worries, we’ve had a good exchange imo.

And yes, darkroom work was different from what we have to deal with in digital, but B&W was - and still is - pretty straightforward.
